English, the world’s most widely spoken language, is a linguistic marvel, a testament to humanity’s ability to adapt, borrow, and evolve. Built on a Germanic core, it has absorbed words from Latin, French, Greek, and many other languages, creating a vast and versatile vocabulary. Yet beneath this richness lies a chaotic system riddled with inconsistencies, ambiguities, and irregularities.

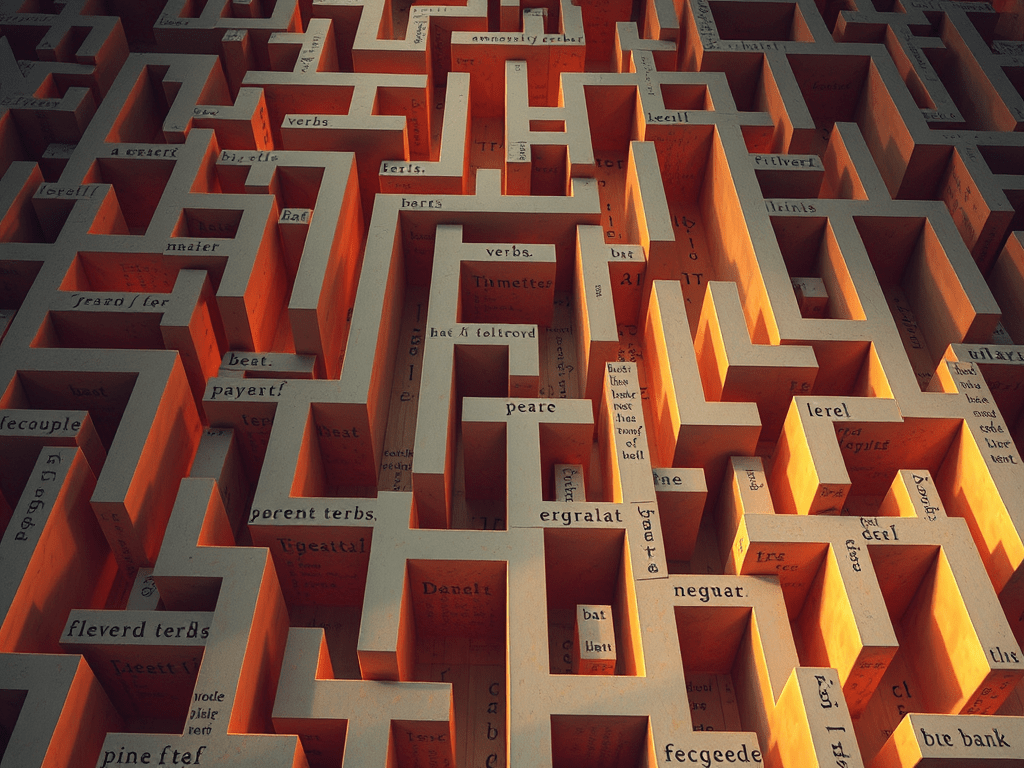

While humans navigate these complexities intuitively, machines that process English face a labyrinth of challenges, and handling them reliably demands large amounts of training data and computation.

Let’s explore why English is a nightmare for NLP systems and how its quirks complicate computational linguistics.

1. Irregular Verbs: The Enemy of Consistency

English verbs often defy predictable conjugation patterns. For learners and machines alike, irregular verbs are a source of confusion because they don’t follow standard rules of tense formation.

• Examples of Irregularity:

• “Go” → “Went”: Why doesn’t it follow the expected pattern and become “goed”?

• “Run” → “Ran” → “Run”: The present tense and past participle are identical, but the simple past changes the vowel.

• “Eat” → “Ate” → “Eaten”: The past tense changes the vowel, while the past participle keeps the original vowel and adds “-en.”

Contrast this with regular verbs:

• “Walk” → “Walked” → “Walked”: A single predictable rule, adding “-ed,” forms both the past tense and the past participle.

For NLP systems, irregular verbs require extensive memorization and additional rules, increasing computational complexity. While humans learn these exceptions through immersion, machines rely on large datasets to identify and predict such patterns, often leading to errors when faced with uncommon verbs.
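To make the cost of exceptions concrete, here is a minimal Python sketch of how a rule-based system might generate past-tense forms: an exception table is consulted first, and the regular “-ed” rule is the fallback. The `past_forms` function and the `IRREGULAR_PAST` table are hypothetical illustrations, not taken from any particular NLP library.

```python
# Hypothetical exception table: (simple past, past participle) for a few
# irregular verbs. A real lexicon would list a few hundred of them.
IRREGULAR_PAST = {
    "go": ("went", "gone"),
    "run": ("ran", "run"),
    "eat": ("ate", "eaten"),
}

def past_forms(verb: str) -> tuple[str, str]:
    """Return (simple past, past participle) for an English verb."""
    # 1. Irregular verbs must be memorized: look them up first.
    if verb in IRREGULAR_PAST:
        return IRREGULAR_PAST[verb]
    # 2. Regular rule: add "-d" if the verb ends in "e", otherwise "-ed".
    #    (Real morphology also needs consonant doubling, "-y" -> "-ied", etc.)
    form = verb + "d" if verb.endswith("e") else verb + "ed"
    return form, form

for v in ["walk", "go", "bake", "swim"]:
    print(v, "->", past_forms(v))
```

Because “swim” is missing from the table, the regular rule produces “swimed” for it, which is exactly the kind of error an incomplete exception list causes with less common irregular verbs.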

2. Ambiguous Words: One Word, Many Meanings

English is teeming with homonyms and polysemous words: single words with several meanings that are either unrelated (homonyms) or related (polysemy). This ambiguity is a significant obstacle for NLP systems.

• Homonyms (Same Word, Different Meanings):

• “Bank”:

• “I deposited money at the bank.” (financial institution)

• “We had a picnic on the bank of the river.” (riverbank)

• “Bat”:

• “The bat flew out of the cave.” (flying mammal)

• “He hit the ball with a bat.” (sports tool)

Each of these sentences is clear only because of the words around “bank” or “bat”; on its own, either word could mean either thing, even to a human. Machines resolve this with probabilistic models trained on vast datasets, which infer the most likely meaning from that surrounding context.
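A toy version of such a probabilistic model is a naive Bayes classifier: it learns how often each context word appears with each sense of “bank” and picks the sense under which the new sentence is most likely. The six training sentences and the sense labels `finance` and `river` are invented for illustration; practical systems learn from far larger datasets.

```python
import math
from collections import Counter

# Hypothetical training data: sentences containing "bank", labeled by sense.
TRAINING = [
    ("deposited money at the bank", "finance"),
    ("the bank approved my loan", "finance"),
    ("opened an account at the bank", "finance"),
    ("picnic on the bank of the river", "river"),
    ("fished from the muddy bank", "river"),
    ("the river overflowed its bank", "river"),
]

def train(examples):
    """Count how often each sense occurs and which words appear with it."""
    sense_counts = Counter()
    word_counts = {}
    vocab = set()
    for text, sense in examples:
        words = text.split()
        sense_counts[sense] += 1
        word_counts.setdefault(sense, Counter()).update(words)
        vocab.update(words)
    return sense_counts, word_counts, vocab

def predict(sentence, sense_counts, word_counts, vocab):
    """Return the sense with the highest naive Bayes log-probability."""
    total = sum(sense_counts.values())
    best_sense, best_score = None, -math.inf
    for sense, count in sense_counts.items():
        score = math.log(count / total)  # prior P(sense)
        n_words = sum(word_counts[sense].values())
        for w in sentence.lower().split():
            # Add-one (Laplace) smoothing keeps unseen words from zeroing the score.
            score += math.log((word_counts[sense][w] + 1) / (n_words + len(vocab)))
        if score > best_score:
            best_sense, best_score = sense, score
    return best_sense

model = train(TRAINING)
print(predict("I deposited a check at the bank", *model))
print(predict("the boat drifted toward the river bank", *model))
```

With only six examples, the model leans on cue words such as “deposited” and “river”, and even common words like “the” shift the scores, which is one reason practical models need so much data.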

• Polysemy (Related but Distinct Meanings):

• “Head”:

• “She shook her head.” (body part)

• “He is the head of the department.” (leader)

• “Spring”:

• “He jumped over the spring.” (mechanical object)

• “The flowers bloom in spring.” (season)

NLP systems must rely on word sense disambiguation (WSD) algorithms, which attempt to predict meaning based on context. These models, however, are far from perfect and often fail with less common usages or nuanced meanings.
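One classic WSD approach is the simplified Lesk algorithm, which picks the sense whose dictionary definition (gloss) shares the most words with the sentence. The sketch below assumes a hand-written sense inventory (`SENSES`) and stopword list; both are hypothetical stand-ins for a real lexical resource such as WordNet.

```python
# Hypothetical sense inventory: one short gloss per sense of "bat".
SENSES = {
    "bat": {
        "mammal": "nocturnal flying mammal that roosts in a cave",
        "sports": "wooden club used to hit the ball in baseball or cricket",
    },
}

# Hypothetical stopword list: function words ignored when counting overlap.
STOPWORDS = {"the", "a", "an", "of", "in", "to", "or", "out", "with", "that", "used"}

def content_words(text: str) -> set[str]:
    """Lowercase, strip surrounding punctuation, and drop stopwords."""
    words = (w.strip(".,!?") for w in text.lower().split())
    return {w for w in words if w and w not in STOPWORDS}

def simplified_lesk(word: str, sentence: str) -> str:
    """Pick the sense whose gloss overlaps most with the sentence."""
    context = content_words(sentence)
    # max() returns the first sense with the highest overlap,
    # so ties go to whichever sense is listed first.
    return max(
        SENSES[word],
        key=lambda sense: len(context & content_words(SENSES[word][sense])),
    )

print(simplified_lesk("bat", "The bat flew out of the cave."))
print(simplified_lesk("bat", "He hit the ball with a bat."))
```

Here the glosses happen to contain “cave”, “hit”, and “ball”, but the overlap is fragile: “flew” does not match “flying”, so without stemming or richer glosses, less common phrasings quickly defeat the method.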

3. Silent Letters: A Phonetic Puzzle

English spelling often doesn’t reflect its pronunciation, thanks to historical changes in the language. Silent letters add another layer of inconsistency, confounding both learners and machines.

• Examples of Silent Letters:

• “Knife” (Silent “k”): The “k” sound was pronounced in Old English, but modern English dropped it.

• “Psychology” (Silent “p”): Derived from Greek, the initial “p” is silent in English pronunciation.

• “Wednesday” (Silent “d”): The “d” is not pronounced in standard speech (“WENZ-day”), though it remains in the spelling.

Text-to-speech and speech-to-text systems need grapheme-to-phoneme (letter-to-sound) models, backed by pronunciation dictionaries, to account for these anomalies. Errors are common when silent letters aren’t anticipated, especially in less frequent words.
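As a rough illustration, a letter-to-sound front end might check a pronunciation lexicon first and fall back to a few silent-letter rules. The `respell` function, its lexicon entry, and the rule table below are hypothetical, and the output is a crude respelling rather than a real phonetic transcription.

```python
# Hypothetical pronunciation lexicon: exceptions no simple rule can predict.
LEXICON = {"wednesday": "wenzday"}

# Hypothetical rule table: word-initial clusters whose first letter is silent.
SILENT_INITIAL = {"kn": "n", "gn": "n", "ps": "s", "wr": "r"}

def respell(word: str) -> str:
    """Return a rough respelling with known silent letters removed."""
    w = word.lower()
    # 1. Exceptions first: a lexicon entry overrides every rule.
    if w in LEXICON:
        return LEXICON[w]
    # 2. Fall back to silent-letter rules for word-initial clusters.
    for cluster, sound in SILENT_INITIAL.items():
        if w.startswith(cluster):
            return sound + w[len(cluster):]
    # 3. No rule applies: assume the word is pronounced as spelled.
    return w

for word in ["Knife", "psychology", "Wednesday", "walk"]:
    print(word, "->", respell(word))
```

“walk” comes back unchanged because no rule covers its silent “l”, which shows why rule sets are always paired with large exception dictionaries.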

4. Spelling and Pronunciation Disconnect

Unlike Sanskrit or Spanish, whose spelling corresponds closely to pronunciation, English often spells the same sound in different ways and gives the same spelling different sounds. This disconnect further complicates NLP tasks like speech recognition and text-to-speech synthesis.

• Same Spelling, Different Pronunciations:

• “Read” (Present: reed, Past: red):

• “I read books every day.” (reed)

• “I read that book yesterday.” (red)

• “Tear” (Rip: tehr, Drop: teer):

• “Tear the paper.” (tehr)

• “She shed a tear.” (teer)

• Same Pronunciation, Different Spelling:

• “Bare” vs. “Bear”: Pronounced the same but spelled differently based on meaning.

• “Two,” “Too,” and “To”: Phonetically identical but grammatically distinct.

NLP systems must include probabilistic phonetic models to predict pronunciation accurately, but these models often struggle with exceptions and context-specific rules.
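For a heteronym like “read”, the pronunciation depends on tense, which a system can only infer from context. The heuristic below is a hypothetical sketch that looks for a preceding auxiliary or a past-time word; its cue lists are assumptions, and production systems typically rely on part-of-speech tagging or learned models instead.

```python
# Hypothetical cue lists; a real system would use a part-of-speech tagger.
PAST_AUXILIARIES = {"have", "has", "had", "was", "were", "been"}
PAST_TIME_WORDS = {"yesterday", "ago", "last"}

def pronounce_read(sentence: str) -> str:
    """Guess whether "read" in the sentence sounds like "reed" or "red"."""
    words = [w.strip(".,!?").lower() for w in sentence.split()]
    i = words.index("read")  # raises ValueError if "read" is absent
    previous = words[i - 1] if i > 0 else ""
    # An auxiliary right before "read" ("has read", "was read"),
    # or a past-time word anywhere in the sentence, suggests the past form.
    if previous in PAST_AUXILIARIES or PAST_TIME_WORDS & set(words):
        return "red"
    return "reed"

print(pronounce_read("I read books every day."))
print(pronounce_read("I read that book yesterday."))
```

The cues are easy to fool: in “I will read the last chapter,” the word “last” wrongly triggers the past-tense reading.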

5. Word Order Determines Meaning

English relies heavily on word order to signal grammatical roles. Sanskrit, by contrast, marks those roles with case endings, so its word order is far more flexible. In English, changing the order of words often alters the meaning entirely.

• Examples:

• “The dog chased the cat.”

• Subject: Dog, Object: Cat.

• “The cat chased the dog.”

• Subject: Cat, Object: Dog.

In both sentences, the grammatical roles of “dog” and “cat” depend entirely on their position, since neither word changes form. NLP systems must therefore rely on syntactic parsing to work out who did what to whom, which adds to their computational burden.
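Because English signals roles by position, even a crude positional rule recovers subject and object in simple sentences like the two above. This sketch assumes hand-assigned part-of-speech tags and a single subject-verb-object clause; `assign_roles` is a hypothetical helper, not a real parser.

```python
Token = tuple[str, str]  # (word, part-of-speech tag), tagged by hand here

def assign_roles(tokens: list[Token]) -> dict[str, str]:
    """Label subject and object in a simple subject-verb-object clause."""
    # Find the (first) verb; the clause is split around it.
    verb_index = next(i for i, (_, tag) in enumerate(tokens) if tag == "VERB")
    nouns_before = [w for w, tag in tokens[:verb_index] if tag == "NOUN"]
    nouns_after = [w for w, tag in tokens[verb_index + 1:] if tag == "NOUN"]
    return {
        "subject": nouns_before[-1],  # closest noun before the verb
        "verb": tokens[verb_index][0],
        "object": nouns_after[0],     # first noun after the verb
    }

s1 = [("The", "DET"), ("dog", "NOUN"), ("chased", "VERB"), ("the", "DET"), ("cat", "NOUN")]
s2 = [("The", "DET"), ("cat", "NOUN"), ("chased", "VERB"), ("the", "DET"), ("dog", "NOUN")]
print(assign_roles(s1))
print(assign_roles(s2))
```

Swapping the word order swaps the roles even though the words themselves are unchanged. Anything beyond this simple pattern, such as passive voice or relative clauses, needs a genuine syntactic parser.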

6. Dialectal Variations

English’s global spread has led to significant variations in pronunciation, vocabulary, and grammar between dialects, adding another layer of complexity.

• Pronunciation Differences:

• “Schedule”:

• British English: shedule.

• American English: skedule.

• “Tomato”:

• British English: tuh-mah-to.

• American English: tuh-may-to.

• Vocabulary Variations:

• “Lift” (British) vs. “Elevator” (American).

• “Torch” (British) vs. “Flashlight” (American).

For NLP systems, accommodating these variations requires training on region-specific data, further increasing resource requirements.
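One simple way to reduce vocabulary variation is to normalize regional terms to a single variety before processing. The mapping table and `normalize_dialect` function below are hypothetical and cover only the two word pairs listed above.

```python
import re

# Hypothetical British-to-American mapping; a real table would be far larger.
BRITISH_TO_AMERICAN = {"lift": "elevator", "torch": "flashlight"}

def normalize_dialect(text: str) -> str:
    """Replace whole-word British terms with their American equivalents."""
    def replace(match: re.Match) -> str:
        word = match.group(0)
        american = BRITISH_TO_AMERICAN[word.lower()]
        # Preserve an initial capital letter, e.g. at the start of a sentence.
        return american.capitalize() if word[0].isupper() else american

    # \b word boundaries keep "lifted" or "torches" from matching.
    pattern = r"\b(" + "|".join(BRITISH_TO_AMERICAN) + r")\b"
    return re.sub(pattern, replace, text, flags=re.IGNORECASE)

print(normalize_dialect("Take the lift and bring a torch."))
print(normalize_dialect("Give me a lift home."))
```

Blind substitution brings back the ambiguity problem: “Give me a lift home” becomes “Give me a elevator home,” because “lift” can also mean a ride. Reliable normalization needs context, not just a lookup table.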

The Computational Cost of English

Processing English in NLP systems involves tackling these challenges head-on:

1. Extensive Training Data: Machines need to learn exceptions, dialectal variations, and idiomatic usage.

2. Complex Probabilistic Models: These are essential for resolving ambiguities, predicting context, and handling irregularities.

3. Significant Computational Power: Training and running models that cope with so many inconsistencies takes large-scale hardware and long processing times.

Even advanced NLP models such as GPT and BERT still make errors when understanding, generating, or translating English. The language’s chaotic nature makes it a comparatively expensive and inefficient medium for computational systems.

Conclusion: English’s Legacy in Chaos

While English is beloved for its adaptability and expressiveness, its quirks make it one of the least efficient languages for NLP. Machines must work overtime to process its irregularities, ambiguities, and inconsistencies. In the next section, we’ll explore how Sanskrit offers a powerful antidote: a language whose clarity and precision align closely with the needs of NLP systems.
